Frequently asked questions

If this page does not answer your question, please refer to our support channels.

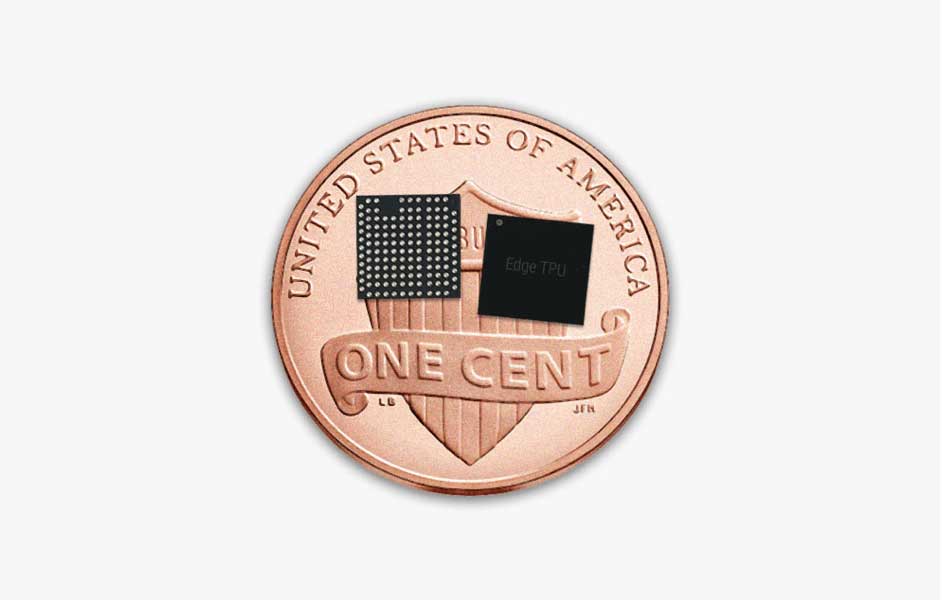

What is the Edge TPU?

The Edge TPU is a small ASIC designed by Google that provides high-performance ML inferencing for low-power devices. For example, it can execute state-of-the-art mobile vision models such as MobileNet V2 at almost 400 FPS, in a power-efficient manner.

We offer multiple products that have the Edge TPU built in.

What is the Edge TPU's processing speed?

An individual Edge TPU can perform 4 trillion (fixed-point) operations per second (4 TOPS), using only 2 watts of power—in other words, you get 2 TOPS per watt.
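As a quick check of the arithmetic behind these figures, the following sketch derives the efficiency from the numbers quoted above and converts the "almost 400 FPS" MobileNet V2 figure into an approximate per-frame time. The function names are made up for this illustration, and the latency conversion assumes frames are processed one at a time.

```python
def tops_per_watt(tops, watts):
    """Efficiency: trillions of operations per second, per watt."""
    return tops / watts


def frame_time_ms(fps):
    """Approximate time per frame in milliseconds at a given frame rate."""
    return 1000.0 / fps


print(tops_per_watt(4, 2))  # 4 TOPS at 2 watts
print(frame_time_ms(400))   # per-frame time at 400 FPS, in ms
```

So 4 TOPS at 2 watts works out to 2 TOPS per watt, and a rate of about 400 FPS corresponds to roughly 2.5 ms per frame.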

What kind of real-world performance does it actually provide?

See our inferencing benchmarks page.

How is the Edge TPU different from Cloud TPUs?

They're very different.

Cloud TPUs run in a Google data center, and therefore offer very high computational speeds—an individual v3 Cloud TPU can perform 420 trillion floating-point operations per second (420 teraflops). Cloud TPUs are ideal when you're training large, complex ML models—for example, models that might take weeks to train on other hardware can converge in mere hours on Cloud TPUs.

By contrast, the Edge TPU is designed for small, low-power devices, and is primarily intended for model inferencing (although it can accelerate some types of transfer learning). So although the computational speed of the Edge TPU is a fraction of that of a Cloud TPU, the Edge TPU is ideal when you want on-device ML inferencing that's extremely fast and power-efficient.

For more information about Cloud TPUs, visit cloud.google.com/tpu.

What machine learning frameworks does the Edge TPU support?

TensorFlow Lite only.

What type of neural networks does the Edge TPU support?

The first-generation Edge TPU is capable of executing deep feed-forward neural networks (DFF) such as convolutional neural networks (CNN), making it ideal for a variety of vision-based ML applications.

For details on supported model architectures, see the model requirements.

How do I create a TensorFlow Lite model for the Edge TPU?

You need to convert your model to TensorFlow Lite, and the model must be quantized using either quantization-aware training (recommended) or full integer post-training quantization. Then you need to compile the model for compatibility with the Edge TPU.

For more information, read TensorFlow models on the Edge TPU.

We also offer several Colab notebook tutorials that show how to retrain models with your own dataset.
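The post-training route can be sketched roughly as follows. This is a minimal example, not a definitive recipe: it assumes TensorFlow 2.x is installed, that you already have a trained Keras model, and that `sample_batches` is a small set of float32 NumPy batches shaped like the model input. The two function names are hypothetical helpers for this illustration.

```python
def make_representative_dataset(sample_batches):
    """Wrap calibration batches in the generator form the converter expects."""
    def generator():
        for batch in sample_batches:
            yield [batch]  # one list entry per model input
    return generator


def convert_to_full_integer_tflite(keras_model, sample_batches):
    """Return the bytes of a fully integer-quantized TensorFlow Lite model."""
    # Imported lazily so the helper above can be used without TensorFlow.
    import tensorflow as tf

    converter = tf.lite.TFLiteConverter.from_keras_model(keras_model)
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    # Sample data lets the converter calibrate activation ranges.
    converter.representative_dataset = make_representative_dataset(sample_batches)
    # Require integer-only ops, so conversion fails if an op cannot be quantized.
    converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
    # Input/output tensor types are left at the converter's defaults here;
    # depending on the TensorFlow version they may remain float.
    return converter.convert()
```

You would then write the returned bytes to a `.tflite` file and pass that file to the Edge TPU Compiler.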

Can I use TensorFlow 2.0 to create my model?

Yes, you can use TensorFlow 2.0 and Keras APIs to build your model, train it, and convert it to TensorFlow Lite (which you then pass to the Edge TPU Compiler).

However, if you're using the Edge TPU Python API, beware that the API requires that all input data be in uint8 format, while the TensorFlow Lite converter v2 leaves input and output tensors in float. So if you're using TensorFlow 2.0 to convert your model to TensorFlow Lite, you must instead use the TensorFlow Lite API. For more information, read about float input and output tensors.
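To make the uint8-versus-float distinction concrete, here is a small sketch of the affine mapping that quantized TensorFlow Lite tensors use, where a real value equals `scale * (q - zero_point)`. The `scale` and `zero_point` values below are illustrative assumptions; for a real model they come from the tensor's quantization parameters (for example, the `quantization` entry returned by the TensorFlow Lite interpreter's `get_input_details()`).

```python
def quantize_to_uint8(values, scale, zero_point):
    """Map real values to uint8 using real = scale * (q - zero_point)."""
    quantized = []
    for value in values:
        q = int(round(value / scale)) + zero_point
        quantized.append(max(0, min(255, q)))  # clamp to the uint8 range
    return quantized


def dequantize(quantized, scale, zero_point):
    """Recover approximate real values from uint8 values."""
    return [scale * (q - zero_point) for q in quantized]


# Illustrative parameters for inputs normalized to roughly [-1, 1].
SCALE, ZERO_POINT = 1 / 128, 128
as_uint8 = quantize_to_uint8([-1.0, 0.0, 0.5, 1.0], SCALE, ZERO_POINT)
print(as_uint8)
print(dequantize(as_uint8, SCALE, ZERO_POINT))
```

Note that 1.0 falls just outside the representable range with these parameters, so it is clamped to 255 and comes back slightly below 1.0.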

Can the Edge TPU perform accelerated ML training?

Yes, but only to retrain the final layer. Because a TensorFlow model must be compiled for acceleration on the Edge TPU, we cannot later update the weights across all the layers. However, we do provide APIs that perform accelerated transfer learning in two different ways:

- Backpropagation that updates weights for just the final fully-connected layer, using a cross-entropy loss function.

- Weight imprinting that uses the image embeddings from new data to imprint new activation vectors in the final layer, which allows for learning new classifications with very small datasets.

For more information about both, read about transfer learning on-device.
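The two approaches above can be illustrated conceptually in plain Python. This is not our API: it is a minimal sketch that assumes the embeddings have already been produced by the rest of the model, that imprinting uses the normalized mean embedding of each class (a common formulation of the technique), and that backpropagation is plain gradient descent on a softmax layer. All function names are hypothetical.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)


def imprint_class_weights(embeddings):
    """Weight imprinting: average one class's normalized embeddings, then renormalize."""
    normalized = [l2_normalize(e) for e in embeddings]
    dim = len(normalized[0])
    mean = [sum(e[i] for e in normalized) / len(normalized) for i in range(dim)]
    return l2_normalize(mean)


def softmax(logits):
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def last_layer_sgd_step(weights, biases, embedding, label, learning_rate=0.1):
    """One in-place gradient step on the final layer; returns the loss before the update."""
    logits = [sum(w * x for w, x in zip(row, embedding)) + b
              for row, b in zip(weights, biases)]
    probs = softmax(logits)
    loss = -math.log(probs[label])  # cross-entropy for the true class
    for k, row in enumerate(weights):
        grad = probs[k] - (1.0 if k == label else 0.0)  # dLoss/dLogit_k
        for i, x in enumerate(embedding):
            row[i] -= learning_rate * grad * x
        biases[k] -= learning_rate * grad
    return loss


# Imprint two classes from two example embeddings each, then fine-tune.
cat = imprint_class_weights([[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]])
dog = imprint_class_weights([[0.1, 0.9, 0.1], [0.0, 0.8, 0.2]])
weights, biases = [cat, dog], [0.0, 0.0]
losses = [last_layer_sgd_step(weights, biases, [0.7, 0.3, 0.0], label=0)
          for _ in range(5)]
print(losses)
```

In both cases only the final layer changes, which is why the rest of the compiled model can stay fixed.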

What's the difference between the Dev Board and the USB Accelerator?

The Coral Dev Board is a single-board computer that includes an SOC and Edge TPU integrated on the SOM, so it's a complete system. You can also remove the SOM (or purchase it separately) and then integrate it with other hardware via three board-to-board connectors—even in this scenario, the SOM contains the complete system with SOC and Edge TPU, and all system interfaces (I2C, MIPI-CSI/DSI, SPI, etc.) are accessible via 300 pins on the board-to-board connectors.

By contrast, the Coral USB Accelerator is an accessory device that adds the Edge TPU as a coprocessor to your existing system. You can simply connect it to any Linux-based system with a USB cable (we recommend USB 3.0 for best performance).

What software do I need?

The software required to run inferences on the Edge TPU depends on your target platform and programming language. In its simplest form, all you need is the Edge TPU runtime installed on your host system, plus the TensorFlow Lite Python API. Other options are available, including APIs for C/C++.

For more information, read the Edge TPU inferencing overview.
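As a rough sketch of the simplest setup, the following uses the TensorFlow Lite Python API (the `tflite_runtime` package) with the Edge TPU runtime loaded as a delegate. It assumes a Linux host, where the runtime library is named `libedgetpu.so.1`, a model that has already been compiled for the Edge TPU, and input data that already matches the model's input shape and type. The helper names `run_inference` and `top_k` are hypothetical.

```python
def top_k(scores, k=3):
    """Return (index, score) pairs for the k highest scores."""
    ranked = sorted(enumerate(scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


def run_inference(model_path, input_data):
    """Run one inference on the Edge TPU and return the first output row."""
    # Imported lazily so top_k() can be used without the runtime installed.
    import tflite_runtime.interpreter as tflite

    interpreter = tflite.Interpreter(
        model_path=model_path,
        # Assumed Linux library name for the Edge TPU runtime.
        experimental_delegates=[tflite.load_delegate("libedgetpu.so.1")],
    )
    interpreter.allocate_tensors()
    input_index = interpreter.get_input_details()[0]["index"]
    output_index = interpreter.get_output_details()[0]["index"]
    interpreter.set_tensor(input_index, input_data)
    interpreter.invoke()
    return interpreter.get_tensor(output_index)[0]
```

For a classification model, you could pass the result of `run_inference()` to `top_k()` to get the most likely class indices.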

Can I buy just the Edge TPU chip?

Not exactly. If you're building your own circuit board, you can integrate our Accelerator Module. This is a surface-mounted module (10 x 15 mm) that includes the Edge TPU and all required power management, with PCIe Gen 2 and USB 2.0 interfaces. For existing hardware systems, you can also integrate the Edge TPU using our PCIe or M.2 cards. For details, see our products page.

If you have other specific requirements, please contact our sales team and we will be happy to discuss possible solutions.
